Behavior-Driven Development (BDD) provides all of the engineering benefits of traditional Test-Driven Development (TDD) while also producing a specification that non-developers can read and validate. At its heart, BDD turns the tests of TDD into specifications. Those specifications are written as English sentences that describe business value rather than coding or engineering details.

The most popular structure for BDD today is the Gherkin format, which follows a Given/When/Then pattern, for example…

Given a new bowling game

When all frames are strikes

Then the score should be 300

There are frameworks, such as SpecFlow, that help you arrange your specifications (tests) into this format. However, I find this format awkward and forced. I prefer the simpler format known as Context/Specification (aka When/Should)…

When all frames are strikes

Should have a score of 300

There are frameworks, such as MSpec, that try to make specifications read more like English sentences. However, I find that these frameworks get in the way as much as they help, and it is nice to be able to write readable tests with just PONU (plain old NUnit). Over time, I’ve developed a convention that I find easy to write and easy to read, along with a tool that turns the tests into a markdown file that can be rendered as a pretty HTML report.

To show the approach at work, here are some snippets from a hypothetical order pricing system I built for a “developer test” set by a prospective employer last summer. Here is what I was given:

> Instructions: Build a system that will meet the following requirements. You may make assumptions if any requirements are ambiguous or vague but you must state the assumptions in your submission.
>
> Overview: You will be building an order calculator that will provide tax and totals. The calculator will need to account for promotions, coupons, various tax rule, etc… You may assume that the database and data-access is already developed and may mock the data-access system. No UI elements will be built for this test.
>
> Main Business Entities:
>
> - Order: A set of products purchased by a customer.
> - Product: A specific item a customer may purchase.
> - Coupon: A discount for a specific product valid for a specified date range.
> - Promotion: A business wide discount on all products valid for a specified date range.
>
> \*Not all entities are listed – you may need to create additional models to complete the system.
>
> Business Rules:
>
> - Tax is calculated per state as one of the following:
>   - A simple percentage of the order total.
>   - A flat amount per sale.
> - Products categorized as ‘Luxury Items’ are taxed at twice the normal rate in the following states
> - Tax is normally calculated after applying coupons and promotional discounts. However, in the following states, the tax must be calculated prior to applying the discount:
> - In CA, military members do not pay tax.
>
> Requirements:
>
> Adhering to the business rules stated previously:
>
> - The system shall calculate the total cost of an order.
> - The system shall calculate the pre-tax cost of an order.
> - The system shall calculate the tax amount of an order.
>
> Deliverables:
>
> - A .NET solution (you may choose either C# or VB) containing the source code implementing the business rules.
> - Unit tests (you may choose the unit testing framework).
> - A list of assumptions made during the implementation and a relative assessment of risk associated with those assumptions.

You can see that there are quite a few specifications here, which makes this a perfect scenario for a BDD approach. Let’s look at the specification that most states charge tax on the discounted price, while a few states require tax to be calculated on the original price.
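Before looking at the specifications, it may help to see how the business rules could fit together in code. The following is a minimal sketch, not the author’s implementation: `TaxKind`, `StateTaxRule`, `LineItem`, and `TaxCalculator` are hypothetical names. Because the assignment does not say which states double the luxury rate or tax before discounts, those are per-state flags rather than hard-coded state lists. Coupons and promotions are assumed to be folded into a single per-line discount, and rounding to cents is left out.

```csharp
using System.Collections.Generic;

// Hypothetical model of the assignment's tax rules (not the author's code).
public enum TaxKind { Percentage, FlatPerSale }

public sealed class StateTaxRule
{
    public TaxKind Kind { get; init; }
    public decimal Amount { get; init; }          // a rate such as 0.05m, or a flat amount per sale
    public bool TaxBeforeDiscount { get; init; }  // true in "prediscount" states
    public bool DoubleLuxuryRate { get; init; }   // true where luxury items are taxed at twice the rate
    public bool ExemptMilitary { get; init; }     // true where military members pay no tax (CA)
}

// Assumption: coupon and promotion discounts are already combined into Discount.
public sealed record LineItem(decimal Price, decimal Discount, bool IsLuxury);

public static class TaxCalculator
{
    public static decimal TaxFor(StateTaxRule rule, IEnumerable<LineItem> items, bool customerIsMilitary)
    {
        if (customerIsMilitary && rule.ExemptMilitary)
            return 0m;

        if (rule.Kind == TaxKind.FlatPerSale)
            return rule.Amount;

        decimal tax = 0m;
        foreach (LineItem item in items)
        {
            // Prediscount states tax the full price; all others tax the discounted price.
            decimal basis = rule.TaxBeforeDiscount ? item.Price : item.Price - item.Discount;
            decimal rate = item.IsLuxury && rule.DoubleLuxuryRate ? rule.Amount * 2 : rule.Amount;
            tax += basis * rate;
        }
        return tax;
    }
}
```

With a hypothetical 5% rate, a $10 item carrying a $5 discount yields $0.25 of tax under a standard rule and $0.50 when `TaxBeforeDiscount` is set. Those are the two outcomes the specifications below pin down.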

Here is the specification that a standard tax state calculates tax on the discounted price…

```csharp
using NUnit.Framework;

namespace Acme.Tests.ConcerningCoupons
{
    [TestFixture]
    public class When_coupon_is_applied_to_item_on_order_in_standard_tax_state
    {
        private Order _order;

        // Runs once per fixture (NUnit 2.x; NUnit 3 replaces this with [OneTimeSetUp]).
        [TestFixtureSetUp]
        public void Context()
        {
            Product product = new Product(10);
            Coupon coupon = CreateCoupon.For(product).WithDiscountOf(.5m);
            _order = CreateOrder.Of(product).Apply(coupon).In(StateOf.NC);
        }

        [Test]
        public void Should_calculate_tax_on_discounted_price()
        {
            // ShouldEqual is an assertion extension method; it is not part of NUnit itself.
            _order.Tax.ShouldEqual(.25m);
        }
    }
}
```

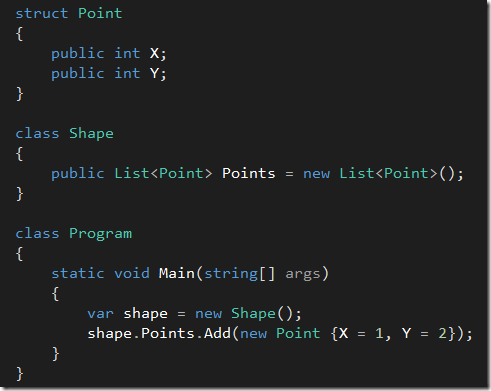

You can see that the test fixture class name defines the context (the when), and the test method name states the specification (the should). The Context method sets up the context described in the class name. Also note ConcerningCoupons in the namespace; it lets us categorize the specification.
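The specification relies on a small fluent vocabulary (`CreateCoupon`, `CreateOrder`, `StateOf`) whose implementation the post does not show. One plausible shape for it is sketched below. Every type and member is a hypothetical stand-in. The 5% rate for both states is an assumption consistent with the expected `.25m` above, and FL is flagged as taxing before the discount only because the sample treats it as a prediscount state.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-ins for the fluent vocabulary used by the specifications.
public sealed class Product
{
    public Product(decimal price) => Price = price;
    public decimal Price { get; }
}

public sealed class Coupon
{
    public Coupon(Product product, decimal discountRate) { Product = product; DiscountRate = discountRate; }
    public Product Product { get; }
    public decimal DiscountRate { get; }  // 0.5m means 50% off
}

public sealed class State
{
    public State(string code, decimal rate, bool taxBeforeDiscount)
    { Code = code; Rate = rate; TaxBeforeDiscount = taxBeforeDiscount; }
    public string Code { get; }
    public decimal Rate { get; }
    public bool TaxBeforeDiscount { get; }
}

// Assumed settings: both states use 5%; FL taxes the pre-discount price.
public static class StateOf
{
    public static readonly State NC = new State("NC", 0.05m, taxBeforeDiscount: false);
    public static readonly State FL = new State("FL", 0.05m, taxBeforeDiscount: true);
}

public static class CreateCoupon
{
    public static CouponBuilder For(Product product) => new CouponBuilder(product);
}

public sealed class CouponBuilder
{
    private readonly Product _product;
    public CouponBuilder(Product product) => _product = product;
    public Coupon WithDiscountOf(decimal rate) => new Coupon(_product, rate);
}

public static class CreateOrder
{
    public static OrderBuilder Of(params Product[] products) => new OrderBuilder(products);
}

public sealed class OrderBuilder
{
    private readonly List<Product> _products;
    private readonly List<Coupon> _coupons = new List<Coupon>();
    public OrderBuilder(IEnumerable<Product> products) => _products = products.ToList();
    public OrderBuilder Apply(Coupon coupon) { _coupons.Add(coupon); return this; }
    public Order In(State state) => new Order(_products.ToList(), _coupons.ToList(), state);
}

public sealed class Order
{
    private readonly IReadOnlyList<Product> _products;
    private readonly IReadOnlyList<Coupon> _coupons;
    private readonly State _state;

    public Order(IReadOnlyList<Product> products, IReadOnlyList<Coupon> coupons, State state)
    { _products = products; _coupons = coupons; _state = state; }

    public decimal Subtotal => _products.Sum(p => p.Price);

    // A coupon only discounts the product it was created for.
    public decimal Discount => _coupons
        .Where(c => _products.Contains(c.Product))
        .Sum(c => c.Product.Price * c.DiscountRate);

    // Prediscount states tax the subtotal; others tax the discounted amount.
    public decimal Tax => (_state.TaxBeforeDiscount ? Subtotal : Subtotal - Discount) * _state.Rate;
}
```

Each builder step returns an object that offers the next word of the sentence, so the specification reads top to bottom while the final `In(...)` call produces the `Order`. With these stand-ins, the $10 product with the 50% coupon gives a tax of 0.25m in NC and 0.50m in FL.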

Here is the specification for the prediscount tax states…

```csharp
using NUnit.Framework;

namespace Acme.Tests.ConcerningCoupons
{
    [TestFixture]
    public class When_coupon_is_applied_to_item_on_order_in_prediscount_tax_state
    {
        private Order _order;

        [TestFixtureSetUp]
        public void Context()
        {
            Product product = new Product(10);
            Coupon coupon = CreateCoupon.For(product).WithDiscountOf(.5m);
            // FL is one of the prediscount tax states in this sample.
            _order = CreateOrder.Of(product).Apply(coupon).In(StateOf.FL);
        }

        [Test]
        public void Should_calculate_tax_on_full_price()
        {
            _order.Tax.ShouldEqual(.50m);
        }
    }
}
```

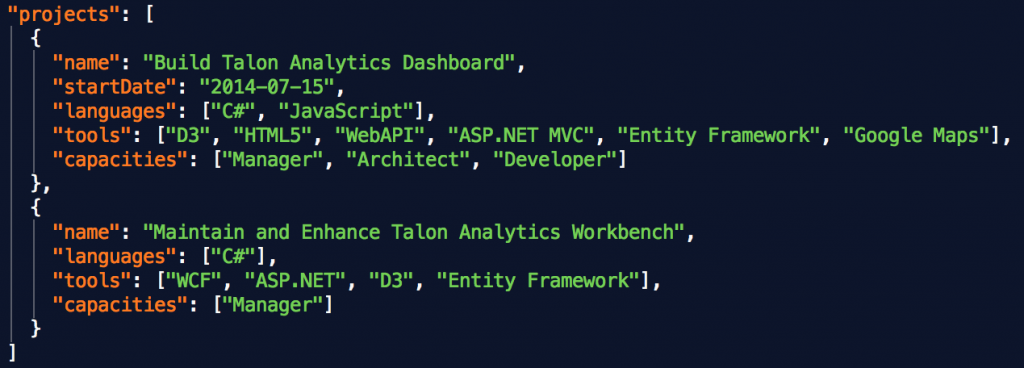
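The two specifications differ only in the taxable amount. Both expected values are consistent with a 5% tax rate. That rate is inferred from the numbers; the post does not state it:

$$
\begin{aligned}
\text{NC (tax after discount):}\quad & 0.05 \times \left(\$10 \times (1 - 0.5)\right) = 0.05 \times \$5 = \$0.25 \\
\text{FL (tax before discount):}\quad & 0.05 \times \$10 = \$0.50
\end{aligned}
$$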

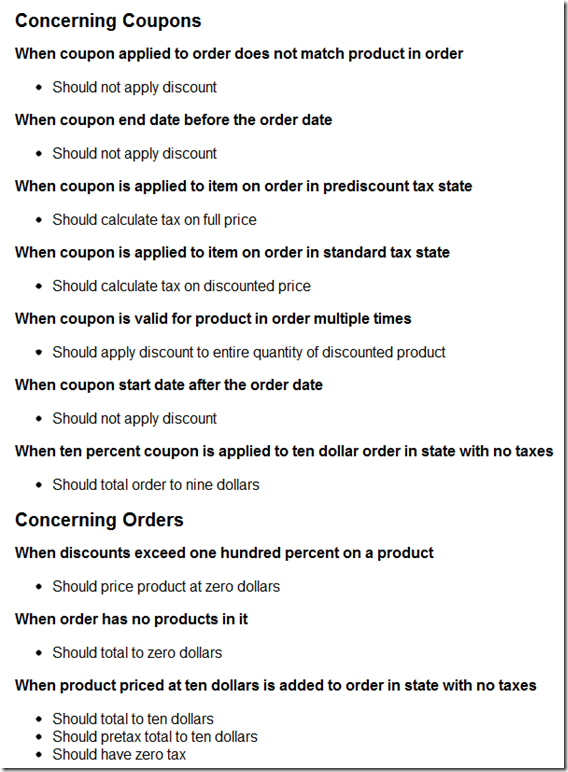

Now take a look at a section of the report generated from the tests…

Anyone can now compare the generated report to the original specification to verify we hit the mark. It’s a little more work to structure your tests this way, but the benefits are worth it.
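Because the convention puts everything the report needs into names, a generator can be driven by reflection alone. The following is a minimal sketch, not the author’s tool. `SpecReport` is a hypothetical name. The sketch assumes that contexts are classes whose names start with `When_`, that specifications are public methods whose names start with `Should_`, and that the last namespace segment, minus a `Concerning` prefix, is the category. The stand-in fixture at the bottom has no NUnit attributes, so the sketch runs on its own.

```csharp
using System;
using System.Linq;
using System.Reflection;
using System.Text;

// Hypothetical generator: reads the When_/Should_ naming convention via
// reflection and emits Markdown grouped by namespace category.
public static class SpecReport
{
    public static string ToMarkdown(Assembly assembly)
    {
        var contexts = assembly.GetTypes()
            .Where(t => t.IsClass && t.Name.StartsWith("When_", StringComparison.Ordinal))
            .OrderBy(t => t.Namespace, StringComparer.Ordinal)
            .ThenBy(t => t.Name, StringComparer.Ordinal);

        var sb = new StringBuilder();
        foreach (var category in contexts.GroupBy(t => Category(t.Namespace)))
        {
            sb.AppendLine("## " + category.Key).AppendLine();
            foreach (Type context in category)
            {
                sb.AppendLine("### " + Words(context.Name)).AppendLine();
                var specs = context
                    .GetMethods(BindingFlags.Public | BindingFlags.Instance | BindingFlags.DeclaredOnly)
                    .Where(m => m.Name.StartsWith("Should_", StringComparison.Ordinal))
                    .Select(m => Words(m.Name))
                    .OrderBy(s => s, StringComparer.Ordinal);
                foreach (string spec in specs)
                    sb.AppendLine("- " + spec);
                sb.AppendLine();
            }
        }
        return sb.ToString();
    }

    // "Should_calculate_tax" -> "Should calculate tax"
    private static string Words(string identifier) => identifier.Replace('_', ' ');

    // "Acme.Tests.ConcerningCoupons" -> "Coupons"; no namespace -> "General".
    private static string Category(string ns)
    {
        const string prefix = "Concerning";
        if (string.IsNullOrEmpty(ns)) return "General";
        string last = ns.Substring(ns.LastIndexOf('.') + 1);
        return last.StartsWith(prefix, StringComparison.Ordinal) ? last.Substring(prefix.Length) : last;
    }
}

namespace Acme.Tests.ConcerningCoupons
{
    // Stand-in fixture without NUnit attributes, used only to exercise the generator.
    public class When_coupon_is_applied_to_item_on_order_in_standard_tax_state
    {
        public void Should_calculate_tax_on_discounted_price() { }
    }
}
```

Calling `SpecReport.ToMarkdown(typeof(SpecReport).Assembly)` on the sketch produces a Coupons section with one context heading and one bullet for its specification. A generator could also select fixtures by NUnit’s `[TestFixture]` and `[Test]` attributes instead of name prefixes.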

The full source for the sample and the report generator are available here.