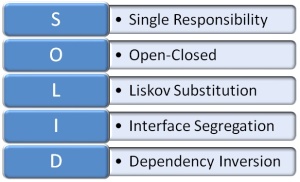

Sometime around 2001, I came across a series of articles by Robert C. Martin. At that time they were already a little old, having appeared in The C++ Report in 1996. I didn’t really care. This guy (whom I now know, as everyone else does, as “Uncle Bob”) had captured and expressed core principles that I knew from experience were the key to creating maintainable software. I knew this mostly from having violated them and paid the price.

The principles put forth in those articles express fundamental truths that are in play in any object-oriented software project.

In the fifth article in the series, Martin sums up the first four…

1. The Open Closed Principle. (OCP) January, 1996. This article discussed the notion that a software module that is designed to be reusable, maintainable and robust must be extensible without requiring change. Such modules can be created in C++ by using abstract classes. The algorithms embedded in those classes make use of pure virtual functions and can therefore be extended by deriving concrete classes that implement those pure virtual functions in different ways. The net result is a set of functions written in abstract classes that can be reused in different detailed contexts and are not affected by changes to those contexts.

2. The Liskov Substitution Principle. (LSP) March, 1996. Sometimes known as “Design by Contract”. This principle describes a system of constraints for the use of public inheritance in C++. The principle says that any function which uses a base class must not be confused when a derived class is substituted for the base class. This article showed how difficult this principle is to conform to, and described some of the subtle traps that the software designer can get into that affect reusability and maintainability.

3. The Dependency Inversion Principle. (DIP) May, 1996. This principle describes the overall structure of a well designed object-oriented application. The principle states that the modules that implement high level policy should not depend upon the modules that implement low level details. Rather both high level policy and low level details should depend upon abstractions. When this principle is adhered to, both the high level policy modules, and the low level detail modules will be reusable and maintainable.

4. The Interface Segregation Principle. (ISP) Aug, 1996. This principle deals with the disadvantages of “fat” interfaces. Classes that have “fat” interfaces are classes whose interfaces are not cohesive. In other words, the interfaces of the class can be broken up into groups of member functions. Each group serves a different set of clients. Thus some clients use one group of member functions, and other clients use the other groups.

The ISP acknowledges that there are objects that require non-cohesive interfaces; however it suggests that clients should not know about them as a single class. Instead, clients should know about abstract base classes that have cohesive interfaces; and which are multiply inherited into the concrete class that describes the non-cohesive object.
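To make the ISP description above concrete, here is a minimal C++ sketch. It is not taken from Martin's article; the names `Printer`, `Scanner`, `OfficeMachine`, `printReport` and `archivePage` are hypothetical and exist only for illustration. The concrete object has a non-cohesive set of operations, but each client sees only the cohesive abstract base class it needs.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Cohesive interface for clients that only print (hypothetical name).
class Printer {
public:
    virtual ~Printer() = default;
    virtual void print(const std::string& page) = 0;
};

// Cohesive interface for clients that only scan (hypothetical name).
class Scanner {
public:
    virtual ~Scanner() = default;
    virtual std::string scan() = 0;
};

// The concrete, non-cohesive object: it multiply inherits both interfaces,
// but no client needs to know about it as a single class.
class OfficeMachine : public Printer, public Scanner {
public:
    void print(const std::string& page) override { printed_.push_back(page); }
    std::string scan() override { return "scanned page"; }
    std::size_t printedCount() const { return printed_.size(); }

private:
    std::vector<std::string> printed_;
};

// This client depends only on Printer; changes to Scanner do not affect it.
void printReport(Printer& printer) {
    printer.print("page 1");
    printer.print("page 2");
}

// This client depends only on Scanner; changes to Printer do not affect it.
std::string archivePage(Scanner& scanner) {
    return "archived: " + scanner.scan();
}
```

An `OfficeMachine` instance can be passed to both `printReport` and `archivePage`, yet neither function has to be touched (or even recompiled, given separate headers) when the other interface changes.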

Martin later published The Single Responsibility Principle, which says that there should never be more than one reason for a class to change.
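As a rough illustration of "one reason to change", the sketch below splits invoice arithmetic from invoice presentation. The classes `Invoice` and `InvoiceTextFormatter` and the `LineItem` struct are hypothetical names chosen for this example, not something from Martin's article.

```cpp
#include <string>
#include <vector>

// Plain data describing one line of an invoice (hypothetical type).
struct LineItem {
    std::string name;
    int quantity;
    int unitPriceCents;
};

// Changes only when the pricing rules change.
class Invoice {
public:
    void add(const LineItem& item) { items_.push_back(item); }

    int totalCents() const {
        int total = 0;
        for (const LineItem& item : items_) {
            total += item.quantity * item.unitPriceCents;
        }
        return total;
    }

    const std::vector<LineItem>& items() const { return items_; }

private:
    std::vector<LineItem> items_;
};

// Changes only when the presentation changes.
class InvoiceTextFormatter {
public:
    std::string format(const Invoice& invoice) const {
        std::string out;
        for (const LineItem& item : invoice.items()) {
            out += item.name + " x" + std::to_string(item.quantity) + "\n";
        }
        out += "Total (cents): " + std::to_string(invoice.totalCents()) + "\n";
        return out;
    }
};
```

Had `format` lived inside `Invoice`, a change to the report layout and a change to the pricing rules would both force edits to the same class, which is exactly the situation the principle warns against.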

There were several aspects of these short articles that helped me so much…

- The articles were short but clear.

- The articles were available on-line for anyone to download and read.

- Martin gave these principles names.

All of these aspects made these principles easy to promote, and I did! I preached all of these principles, whether in C++ or later in C#. So I am particularly pleased to see the recent resurgence of these principles, as the ALT.NET crowd has arranged them into a nice acronym (SOLID) and made back-to-basics cool again. We see the SOLID principles all over blogs and articles in the agile community. It reaffirms my belief that the fundamentals may go in and out of style, but they are always what matters most in good software development.