Being able to push Raven data out to a SQL-based data repository for reporting will be a huge boost to RavenDB adoption. The Index Replication Bundle does a great job of solving that problem, but I found the documentation to be a little weak.

I created a minimal program that implemented it for myself and decided to post it to GitHub so someone else looking to experiment with the Index Replication Bundle can get started more smoothly. This Stack Overflow question was helpful along the way.

This sample has a simple Raven store with a collection of trivial documents. Those documents are directly reflected out to SQL Server for reporting purposes. Once you have all of the necessary plumbing worked out, making the mapping between Raven and SQL Server more sophisticated is just a matter of making the indices more complicated.

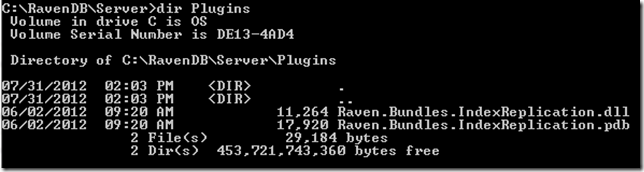

Install the Plugin

Create a “Plugins” directory under the directory where Raven.Server.exe resides. Copy Raven.Bundles.IndexReplication.dll there…

Configure the Connection String

Add a connection string to Raven.Server.exe.config…

    <connectionStrings>
      <add name="Reports"
           providerName="System.Data.SqlClient"
           connectionString="Data Source=(local);Initial Catalog=Sample;Integrated Security=SSPI;"/>
    </connectionStrings>

Create a database in SQL Server called “Sample”. The rest of the steps are implemented in the sample program.

Create Table

A bit of code to create the table in SQL Server…

    using (SqlConnection connection = new SqlConnection(_sqlConnectionStringTextBox.Text))
    {
        connection.Open();
        SqlCommand createTable = new SqlCommand(@"
            CREATE TABLE [dbo].[Dogs](
                [Id] nvarchar(64) NULL,
                [Name] nchar(255) NULL,
                [Breed] nchar(255) NULL) ON [PRIMARY]", connection);
        createTable.ExecuteNonQuery();
        connection.Close();
    }

Create Index

Next, we create a Raven index…

    public class Dogs_List : AbstractIndexCreationTask<Dog>
    {
        public Dogs_List()
        {
            Map = dogs => from d in dogs
                          select new { Breed = d.Breed, Name = d.Name };
        }
    }

Configure Replication

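It was not clear to me from the docs how to apply the replication configuration. It is simple once you see it, but not necessarily obvious: the configuration is just a document stored in the Raven database, with an Id of "Raven/IndexReplication/" followed by the index name (Dogs/List for the Dogs_List index above)…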

    var replicationDocument = new Raven.Bundles.IndexReplication.Data.IndexReplicationDestination
    {
        Id = "Raven/IndexReplication/Dogs/List",
        ColumnsMapping =
        {
            { "Name", "Name" },
            { "Breed", "Breed" }
        },
        ConnectionStringName = "Reports",
        PrimaryKeyColumnName = "Id",
        TableName = "Dogs"
    };

    using (var store = new DocumentStore { Url = _ravenUrlTextBox.Text })
    {
        store.Initialize();
        store.DatabaseCommands.EnsureDatabaseExists(RavenDatabaseName);
        using (var session = store.OpenSession(RavenDatabaseName))
        {
            session.Store(replicationDocument);
            session.SaveChanges();
        }
    }

Add Items to Raven

Now, once you add items to Raven, they should appear in the Dogs table in SQL Server.
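For anyone who wants to try this step without the sample program, here is a minimal sketch of adding a couple of documents. The Dog class and the DogSeeder helper are my own illustrative names, not code from the sample; the Dog shape is guessed from the columns used above, and the sketch assumes the Raven database already exists.

```csharp
using Raven.Client.Document;

// Hypothetical document type: the properties are assumed from the
// Id, Name and Breed columns mapped above.
public class Dog
{
    public string Id { get; set; }     // left null so Raven generates a key on Store
    public string Name { get; set; }
    public string Breed { get; set; }
}

// Hypothetical helper, for illustration only.
public static class DogSeeder
{
    // A couple of trivial documents to push through the index.
    public static Dog[] SampleDogs()
    {
        return new[]
        {
            new Dog { Name = "Rex", Breed = "Beagle" },
            new Dog { Name = "Fido", Breed = "Collie" }
        };
    }

    // Stores the sample dogs in the named Raven database.
    public static void StoreDogs(string ravenUrl, string databaseName)
    {
        using (var store = new DocumentStore { Url = ravenUrl })
        {
            store.Initialize();
            using (var session = store.OpenSession(databaseName))
            {
                foreach (var dog in SampleDogs())
                {
                    session.Store(dog);
                }
                session.SaveChanges();
            }
        }
    }
}
```

Calling DogSeeder.StoreDogs with your server URL and database name (both placeholders you supply) writes the documents in one batch. Indexing and replication happen in the background, so the rows may take a moment to show up in SQL Server.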

If you don’t see them, try turning debug logging on.