Interviewing developers has given me an interesting, although not scientific, sampling of the thinking and mindset of software developers in the Atlanta area. While there have been a few bright spots, most of what I’ve learned has been disheartening.

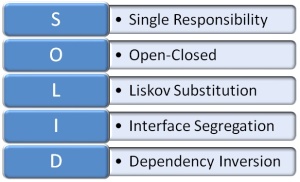

I have found that TDD is still only an idea that most developers have heard about but never practiced. Of the ones who have practiced it, almost none understand what TDD was meant to achieve; they view it only as automated unit testing. I believe this is due to two factors working together to derail the original intent of TDD. First, TDD was named poorly. “Test” has a specific meaning and has had it for a long time. It naturally biases us toward a certain type of thinking (strangely enough… testing and quality assurance). Second, most developers and development organizations would rather apply a formula or recipe to what they do than take the time and effort to deeply understand what makes software development succeed or fail (and all of the degrees in between).

Scott Bellware is the guy who first got me thinking about a new way to approach TDD. It started with a new name: Behavior Driven Development (BDD). I actually think the final “D” should be changed to Design to complete the mind shift. In a nutshell, BDD attempts to get back to the XP view of the tests as documentation of what the code should do. In this way of thinking, the set-up code is referred to as context and the assertions are referred to as specification. It has helped reform my thinking about TDD for the better.
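As a rough illustration of that vocabulary, here is a minimal sketch using Python's built-in `unittest` module. The `Account` class and every test name are hypothetical, invented for this example; this is one way to lay out context and specification, not a canonical BDD style. The fixture's class name and `setUp` describe the context, and each test method states a single specification as a readable sentence.

```python
import unittest


class Account:
    """Hypothetical class being specified; not from the original post."""

    def __init__(self, balance=0):
        self.balance = balance

    def withdraw(self, amount):
        # Refuse the withdrawal before touching the balance.
        if amount > self.balance:
            raise ValueError("insufficient funds")
        self.balance -= amount


class WhenWithdrawingFromAFundedAccount(unittest.TestCase):
    def setUp(self):
        # Context: an account holding 100, from which 30 is withdrawn.
        self.account = Account(balance=100)
        self.account.withdraw(30)

    def test_should_reduce_the_balance_by_the_amount_withdrawn(self):
        # Specification: one observable behavior, named as a sentence.
        self.assertEqual(self.account.balance, 70)


class WhenWithdrawingMoreThanTheBalance(unittest.TestCase):
    def setUp(self):
        # Context: an account that cannot cover the requested amount.
        self.account = Account(balance=20)

    def test_should_refuse_the_withdrawal(self):
        with self.assertRaises(ValueError):
            self.account.withdraw(50)

    def test_should_leave_the_balance_unchanged(self):
        with self.assertRaises(ValueError):
            self.account.withdraw(50)
        self.assertEqual(self.account.balance, 20)
```

Read aloud, the fixture and method names form a sentence ("when withdrawing more than the balance, should leave the balance unchanged"), which is what lets the tests double as documentation of what the code should do.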

You can catch Scott pontificating on the subject on the latest Hanselminutes podcast. Scott doesn’t get as specific as I would have liked, but this interview shows the side of Scott that I like so much. He’s passionately advocating a valuable practice and philosophy without the insults and vitriol that he is prone to fall into. I highly recommend it.

[Update: My reference to “Scott” in the above paragraph is to Bellware–not Hanselman, who is always pleasant and rarely vitriolic.]

As I’ve made my transition from the Microsoft desktop platform (x86, Windows, .NET, C#, etc.) to the world of handheld devices (ARM, Windows CE, Windows Mobile, .NET CF, etc.), there have been a number of fairly basic things that I’ve had to learn the hard way. They are so basic to being productive in the compact world that there ought to be a brief guide to bring an experienced desktop developer up to speed in short order. I didn’t come across one, so I intend to provide it here for the next guy (or gal) who comes this way.

The sorts of things I plan (so far) to cover include topics like: What is CE and how does it relate to Windows Mobile? What is the memory model on CE? What are the memory limitations and strategies for working within and around them? What do you have to do to run your .NET code on the handheld device? How do you do unit testing with CF code? I’m also going to document a few potholes that initially slowed me down.