I just came across the best magazine-article-length summary of DDD that I’ve ever seen. If you’ve heard of DDD but aren’t ready to commit to reading the whole Blue Book, check out Dan Haywood’s An Introduction to Domain Driven Design. It is clear, concise, and remarkably comprehensive for all of its brevity. It also makes for a great refresher.

The Commoditization of Transactions

I’ve written in the past about how the relentless march of progress in software has made yesterday’s innovations today’s commodity.

This article from HBR captures the essence of how the software landscape is in the process of making traditional, data-oriented, system-of-record, transactional systems into commodity systems. Companies that have made a living with these traditional systems are going to wake up very shortly and find that their customers have become someone else’s community participants.

The combination of ubiquitous mobile connection, cloud computing, and the general adoption of social media is in the process of changing the expectations of software users. Keeping their data safe is no longer enough. They now expect (or soon will) a more immersive experience.

This doesn’t mean a link to a Facebook fan page that is merely a shallow marketing ploy. Users expect that their hosted application will allow them to interact with all of the other users of your software (see Spiceworks as the best example of this). They expect that the software they run their business on enlists them into the community of users of your software. Don’t have a “community” of users? Your competition soon will.

DDD Anti-Pattern #4: Allowing Implementation Decisions to Drive the Domain Model

This is the last (for now) in my series of lessons learned building a complex product from the ground up following the principles of Domain Driven Design.

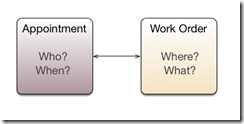

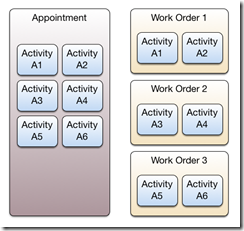

The Field Service domain is all about getting people to Locations to perform Activities. The Activities and the Location are defined by a Work Order. The person performing the Activities (and possibly the time) are defined by an Appointment. You can think of the Work Order as the what and where and the Appointment as the who and when.

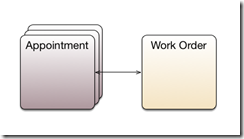

The model is simple as long as there is one Work Order and one Appointment. In fact, you’d be tempted to combine them into one entity. However, things get more complicated when you have one Work Order that requires multiple Appointments to complete it. It might be two technicians at one time or the same technician on two different visits or multiple technicians over multiple visits.

In these cases, we are splitting the Activities of the Work Order over multiple Appointments. Some of the Activities are associated with one Appointment and some with another.

The Work Order is complete when all Activities over all Appointments are complete. Not too complicated.

Now also imagine that a technician goes out on one Appointment but services more than one Work Order.

In simplest terms, we have a many-to-many between Appointments and Work Orders. You can imagine the twisted case where a given Appointment services two different Work Orders, and each of those Work Orders has other Appointments serving them.

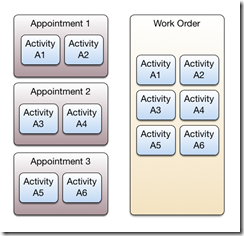

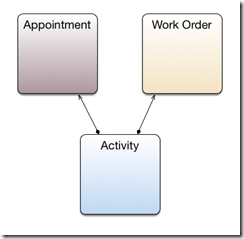

We’ve all implemented many-to-many relationships in databases and in object models. However, this situation is a little different because of the role of the Activity. The linkage between the Appointment and the Work Order is through their association with a common Activity.

A given Activity is associated with one Work Order and one Appointment. We can now traverse that relationship to discover the relationship between Appointment and Work Order.

We simply have Work Orders with collections of Activities and Appointments with collections of Activities. With that, the complex relationship between Appointment and Work Order is completely modeled, with no redundant connections (like something directly linking Appointments and Work Orders). I thought this was pretty cool. And it was.
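The shape of that model can be sketched in a few lines. This is a minimal illustration, not our production code: the class fields (`number`, `location`, `technician`, `name`) and the `work_orders()` helper are hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)
class WorkOrder:
    number: str
    location: str
    activities: list["Activity"] = field(default_factory=list)


@dataclass(eq=False)
class Appointment:
    technician: str
    activities: list["Activity"] = field(default_factory=list)

    def work_orders(self) -> list[WorkOrder]:
        """Derive the Work Orders by walking the shared Activities."""
        found: list[WorkOrder] = []
        for activity in self.activities:
            if activity.work_order not in found:
                found.append(activity.work_order)
        return found


@dataclass(eq=False)
class Activity:
    name: str
    work_order: WorkOrder
    appointment: Appointment

    def __post_init__(self) -> None:
        # The Activity is the only link between the two sides.
        self.work_order.activities.append(self)
        self.appointment.activities.append(self)


# One Appointment servicing two Work Orders.
wo1 = WorkOrder("WO-1", "12 Main St")
wo2 = WorkOrder("WO-2", "40 Oak Ave")
visit = Appointment("Pat")
Activity("Replace filter", wo1, visit)
Activity("Inspect pump", wo2, visit)

print([wo.number for wo in visit.work_orders()])
```

Nothing links `Appointment` to `WorkOrder` directly; the relationship exists only as a traversal through `Activity`.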

But here is where the problem comes in… In the real world, Appointments really are associated with Work Orders—and not just by the happenstance of common Activities. (One could argue this actually is true because you would only schedule an Appointment to service a Work Order if there was some Activity to perform, but this is not how anyone thinks about the relationship.)

With our model, to answer the question “Where does this Appointment take place?” we have to go to one of the Activities in our collection, navigate to its Work Order, and look at its Location property. This is not a big deal to do in code, and we can even put a property on the Appointment that hides this messiness and gives the illusion that an Appointment has a Location. But this is not the only difficulty.

Try looking in our database to see what Work Order a given Appointment is associated with. You have to go to the Activity table and find all the Activities associated with this Appointment, then look at the Work Order column of those Activities to find the ID(s) of the Work Order(s). One day I tried to create a view that shows an Appointment and its first Work Order (there was only ever one Work Order per Appointment in practice). I gave up after 20 minutes.
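To make the indirection concrete, here is a rough sketch against a deliberately simplified, hypothetical schema (the table and column names are assumptions, and the real schema was considerably more involved). It uses an in-memory SQLite database only so the example is self-contained.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE work_order (id INTEGER PRIMARY KEY, location TEXT);
    CREATE TABLE appointment (id INTEGER PRIMARY KEY, technician TEXT);
    CREATE TABLE activity (
        id INTEGER PRIMARY KEY,
        name TEXT,
        work_order_id INTEGER REFERENCES work_order(id),
        appointment_id INTEGER REFERENCES appointment(id)
    );
    INSERT INTO work_order VALUES (1, '12 Main St'), (2, '40 Oak Ave');
    INSERT INTO appointment VALUES (10, 'Pat');
    INSERT INTO activity VALUES
        (100, 'Replace filter', 1, 10),
        (101, 'Inspect pump', 1, 10);
""")

# The appointment table has no work order column, so the only route
# to the Work Order is through the activity rows.
rows = conn.execute("""
    SELECT DISTINCT a.appointment_id, w.id, w.location
    FROM activity AS a
    JOIN work_order AS w ON w.id = a.work_order_id
    WHERE a.appointment_id = ?
    ORDER BY w.id
""", (10,)).fetchall()

print(rows)
```

Even in this toy version, the question "which Work Order is this Appointment for?" cannot be answered from the appointment row itself, and an Appointment with no Activities yet has no Work Order at all.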

Now imagine explaining this model to a programmer trying to integrate with our system. It’s possible, but there is much more confusion than you’d like.

We had a technically tight and elegant implementation, but it ended up obscuring one of the most fundamental relationships in our domain.

Remedy

As much as we liked the normalization of our current implementation, the team decided that it would be better to model the relationship between Appointment and Work Order explicitly. If we had focused more on the domain and less on the cleverness of the implementation, we could have avoided this rework of the design.
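As a hedged illustration of what "explicit" could look like (the details of our actual redesign are not shown here, and the names `schedule_for` and `locations` are hypothetical), the Appointment simply holds its Work Orders directly:

```python
from dataclasses import dataclass, field


@dataclass(eq=False)
class WorkOrder:
    number: str
    location: str


@dataclass(eq=False)
class Appointment:
    technician: str
    # The relationship is stated outright instead of being inferred
    # from shared Activities.
    work_orders: list[WorkOrder] = field(default_factory=list)

    def schedule_for(self, work_order: WorkOrder) -> None:
        if work_order not in self.work_orders:
            self.work_orders.append(work_order)

    @property
    def locations(self) -> list[str]:
        return [wo.location for wo in self.work_orders]


visit = Appointment("Pat")
visit.schedule_for(WorkOrder("WO-1", "12 Main St"))
print(visit.locations)
```

The cost is some redundancy with the Activity links, which then has to be kept consistent; the benefit is that the model says what everyone in the domain already believes.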

11 Reasons You Want Mobility Experience Before Building a Mobile HTML5 Application

Two forces have converged: 1) Mobility has gone from an optional differentiator to an expected component of any software offering, and 2) HTML5 has been crowned as the solution that will solve the cross-platform problem that Java, Flash, and Silverlight failed to solve before it.

This collision of forces has turned HTML5 into a buzzword with a life of its own. In fact, it appears to be on its way to becoming as detached from reality as the all-time-champion of promising technology turned meaningless buzzword: SOA.

Don’t get me wrong, I believe HTML5 is the best current answer to cross-platform mobile software. Before recommending to my executive management that we build our cross-platform offering in JavaScript, using the family of features loosely known as HTML5, I looked at pure native, cross-platform native with Mono, and frameworks like Titanium. I made that recommendation before HTML5 became the cool thing to do, and I haven’t regretted it for a second.

A sure sign that a technology has reached fad level is when articles start to appear pointing out that said new technology will not, in fact, usher in world peace. Such an article was the popular 11 hard truths about HTML5.

The title was a little ominous and I began to read it with some trepidation, as we were still a couple of months away from being ready to ship our HTML5 client application. However, as I read, I was comforted by the fact that although all 11 truths were valid challenges, our team had faced and dealt with each of them.

The article lays out eleven challenges when building an HTML5 application:

- Security is a nightmare.

- Local data storage is limited.

- Local data can be manipulated.

- Offline apps are a nightmare to sync.

- The cloud owes you nothing.

- Forced upgrades aren’t for everyone.

- Web Workers offer no prioritization.

- Format incompatibilities abound.

- Implementations are browser-dependent.

- Hardware idiosyncrasies bring new challenges.

- Politics as usual.

It’s a scary list, and they are all true. My team was able to handle and mitigate each of these challenges largely because we had years of experience building native mobile applications and desktop Web applications and much of what we learned there applied in the HTML5 world.

If you don’t have solid answers for each of these challenges, you really ought to get someone on the team who has confidence in dealing with each of them.

You can check my current availability here.

Sprint Planning and Decision Fatigue

This article explores the physiological and psychological effects of fatigue brought on by making decisions. The fatigue that comes from making decision after decision immediately reminded me of my team’s Scrum sprint planning days.

The Scrum method breaks software development into iterative cycles called sprints. Our sprints were the highly typical two weeks in length. The idea is that two weeks is a short enough planning horizon that we can pull in enough work from the backlog to fill that time period. Then we demo what we’ve done to the various stakeholders in the company, adjust existing backlog priorities, plan another sprint, and on it goes.

Sprint planning day looked something like this…

9:00 Demos (any stories that haven’t been shown yet)

9:30 Close out the Previous Sprint (closing stories in VersionOne, splitting any unfinished stories, etc.)

10:00 Retrospective (look back over the prior sprint and identify things that worked well that we want to do more of, what didn’t work so well, and identify any impediments to progress)

10:45 Start Sprint Planning (Story Breakdown)

1:30 Finish Sprint Planning (Story Breakdown)

We often wouldn’t finish sprint planning until after 4:30.

Story Breakdown

Sprint planning is the process of taking the high-level stories and breaking them down into tasks. We did this as a team; so, we had everyone’s input and everyone knew how we were going to go about implementing each story. This is vital to maintaining a team approach to building the product.

This story breakdown, however, is the hardest part of the whole sprint. We have to make decision after decision about how we are going to implement a feature…

Will there be a new database table? Will it be a variant of some existing feature or something new? Is there some new UI element that we haven’t tackled before? and so on.

Then for every decision, we have one more decision: how long do we think it will take.

I believe the hardest part is that we move from one decision to the next without actually doing anything. We are simply adding our decisions to the inventory to be acted on over the next two weeks. This makes the decision fatigue factor even greater.

By the time we got to 3:00 or 3:30 the team would often be so fatigued that we would start placing two tasks on each story: Plan it and Do it. During the sprint, if we came across a story with a “Do it” task, it was a safe bet that it was planned late in the day.

Remedy

I can see two ways to reduce the decision fatigue that comes with Scrum planning day: 1) reduce the Sprint length, or 2) don’t do Scrum.

For most teams doing Scrum, I think shrinking the Sprint length to one week will reduce the planning day fatigue. Our team, for other reasons, switched to a Kanban continuous flow model. Under that model, we did the story breakdown as the queue of planned stories got low. It was never two weeks’ worth at one time, and we had fewer “Do it” tasks.

DDD Anti-Pattern #3: Not Taking Bounded Contexts Seriously

My team had the challenge of building a field service automation application that would run on Windows Mobile devices. Availability of the Compact Framework meant that most code that would run on the server would also run on the device. This meant that we could build one domain object model and deploy it on the server and on the client. But in Jurassic Park fashion, we were so preoccupied with the fact that we could, we didn’t spend enough time asking if we should.

The Advantage We Got

We used the Repository pattern and built our domain model out of plain old CLR objects (POCOs). This meant that we could have the same rich domain model both on the client where the user is interacting through a UI and on the server where changes from the field and from host system integrations are being processed. This allowed us to keep our code DRY. There was no repetition among the entities, behaviors, relationships between entities, and tests. We never had to worry about model inconsistencies between the client and the server. It seemed like a big win, and in some ways it was.

The Price We Paid

As we built out the product, we saw that the object model’s usage patterns were not the same on the client as on the server. We ended up with behavior that only applied on the client or on the server.

In an effort to keep from putting code into our domain that would not be relevant on both the client and server, we started putting logic into little services that work with the domain. Most of this logic was on the client side and got applied through use of the Event Aggregator pattern. This worked, but tended to impoverish the domain model itself.
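For readers who have not used the Event Aggregator pattern, here is a minimal sketch of the idea. The event and service names (`ActivityCompleted`, `ClientSyncReminder`) are hypothetical and are not taken from our code base.

```python
from collections import defaultdict
from dataclasses import dataclass


class EventAggregator:
    """Routes published events to whoever subscribed to that event type."""

    def __init__(self) -> None:
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event) -> None:
        for handler in self._handlers[type(event)]:
            handler(event)


@dataclass
class ActivityCompleted:
    activity_name: str


class ClientSyncReminder:
    """A client-only service that lives outside the shared domain model."""

    def __init__(self, events: EventAggregator) -> None:
        self.pending = []
        events.subscribe(ActivityCompleted, self.on_completed)

    def on_completed(self, event: ActivityCompleted) -> None:
        self.pending.append(event.activity_name)


events = EventAggregator()
reminder = ClientSyncReminder(events)
events.publish(ActivityCompleted("Inspect pump"))
print(reminder.pending)
```

The shared model stays free of client-specific code, but the behavior now lives in the service rather than in the entity, which is exactly the impoverishment described above.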

Fixing It

The problem largely fixed itself when Windows Mobile became irrelevant almost overnight. We rebuilt the client in JavaScript that runs on most modern browsers. This gave us a do-over opportunity and removed the option of sharing model code between the server and client.

If I had the Windows Mobile situation to do over again, I would be more careful to define a common structure that the client and server could share, but compose behaviors relevant to the client and server within each of those contexts.
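One way to picture that arrangement, as a sketch under my own assumptions rather than a design we actually shipped (all class and method names here are hypothetical): the structure is shared, and each bounded context wraps it with its own behavior.

```python
from dataclasses import dataclass


@dataclass
class WorkOrderData:
    """Structure shared by both contexts: state only, no behavior."""
    number: str
    description: str
    completed: bool = False


class ClientWorkOrder:
    """Client context: behavior the technician's UI needs."""

    def __init__(self, data: WorkOrderData) -> None:
        self.data = data

    def mark_complete(self) -> None:
        self.data.completed = True


class ServerWorkOrder:
    """Server context: behavior for processing host system changes."""

    def __init__(self, data: WorkOrderData) -> None:
        self.data = data

    def apply_host_update(self, description: str) -> None:
        self.data.description = description


shared = WorkOrderData("0123", "Fix pump")
ClientWorkOrder(shared).mark_complete()
ServerWorkOrder(shared).apply_host_update("Fix pump and replace seal")
print(shared)
```

Each context gets a model that is rich in the ways it cares about, and neither has to carry behavior that only makes sense on the other side.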

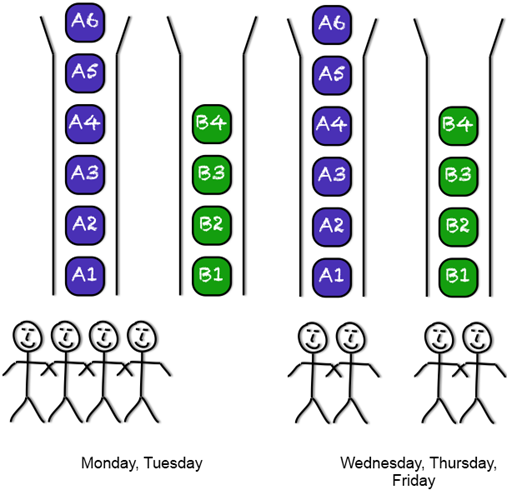

Split the Team or Split the Backlog?

Small software companies often find themselves trying to do too much with too little. This was certainly the case at Agentek. At one point earlier this year, we had a problem… We were not finished with the current release (call it release A), but we could not wait until it was finished to get a start on our next release (call it release B). There was too much unknown involved in release B. We had to get started on it. At the same time, we had just committed to ourselves that we would not leave our customers with any more half-finished releases. What to do?

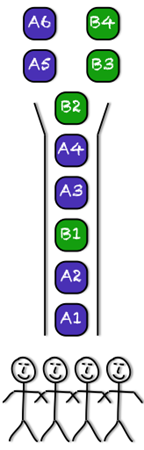

Our team had four fully dedicated developers, a tester, and me. We needed to dedicate 25% of our time to release B. The first option was to simply intersperse the backlog with stories from release A and release B…

Interspersed Backlog

There were two problems with the interspersed backlog. First, release A has a good bit of reactive work; so, the backlog is unpredictable and tends to consume the entire team’s attention. Second, since stories vary in actual effort to complete, we cannot really gauge or control what percentage of our capacity is applied to each effort.

The next idea was to split the team and apply 25% of the people to release B…

Split the Team

This is the mathematically cleanest solution and the option that traditional software managers would probably pick every time. However, this option has major disadvantages…

- Only one developer will know anything about how release B was implemented.

- The many advantages of pairing are lost on both efforts because we have only one developer on release B and an odd number on release A.

This option really just throws the team-based approach to building software out the window; so, not an option for us.

The next thought was to create two separate backlogs…

Split the Backlog

This makes the problem we are trying to solve clearer, but we still have the problem of how do we stay united as a team, yet timebox each backlog.

The next step was to designate days of the week for servicing each backlog. To give the forward-looking release one fourth of our capacity, we dedicated one pair to that backlog for half of the week. To simplify things, and give it a little more than 25%, we went ahead and gave it three full days instead of two and a half. So this is what things looked like…

Split the Backlog and the Week

We rotated the release B pair so that there would always be one person who had worked on it the previous week, for continuity, and one new person.
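The arithmetic behind those percentages is easy to check. This small sketch assumes a five-day work week and counts capacity in developer-days only (the four developers, not the tester or me); both are simplifying assumptions.

```python
developers = 4
pair_size = 2
work_days = 5  # assumed five-day week


def share_of_capacity(days_on_release_b: float) -> float:
    """Fraction of developer capacity one pair spends on release B."""
    return (pair_size / developers) * (days_on_release_b / work_days)


half_week = share_of_capacity(2.5)   # one pair for half the week
three_days = share_of_capacity(3)    # one pair for three full days

print(f"{half_week:.0%} vs {three_days:.0%}")
```

One pair for half the week is exactly one fourth of developer capacity; rounding up to three full days gives release B roughly 30%.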

In our first retrospective after we finished release A (and moved onto release B fulltime), the team was convinced of a few things:

- One team working on two releases at once is hard.

- Keeping the team together was really important.

- Splitting the backlogs and the week turned out to be a great way to do both at the same time.

DDD Anti-Pattern #2: Not Getting the Whole Team Educated on DDD Early Enough

The two challenges that drew me to Agentek in late 2008 were interrelated in the same way that the proverbial chicken and egg are. We had to build a complex, composite, occasionally connected, enterprise mobility application to replace the prior practice of custom, one-off solutions. At the same time, we had to bring the existing development group up to speed on current software techniques, practices, patterns, and processes.

I couldn’t hold off on designing and building the new product until everyone had gotten the DDD religion. In reality, I don’t think I would have done that even if I had the luxury of that kind of time. Concepts like DDD are best learned by doing them with someone who has done it before. Reading books and hearing presentations can get you excited about them, but only doing them helps you actually learn them.

As I prepared for the first meetings with the domain experts (folks from across the organization who had implemented multiple custom solutions and had a good sense of the domain of our new product), I sent out a copy of Domain Driven Design Quickly. I wanted to give them as much of an idea of what I was after as possible up front, but it mostly came down to me explaining it as we were doing it.

We had some great sessions. The white board photos we made in those early days formed a remarkably useful and resilient core of the domain model that is still reflected in the product today. We hashed out much of the [soon to be] ubiquitous language, and we all had a vision of what the new product would be.

Shortly after this, we hired a big DDD advocate (I’m still amazed at our good fortune in finding him. They are rare now, even more so in 2009). With Jarrel and me on the development team, we spread the DDD mindset organically as we built out the product. This seemed like a good and pragmatic approach.

In late 2010/early 2011, the entire development organization went through the blue book, chapter by chapter, discussing the pros and cons of Evans’ approach, where we’ve been effective, and where we haven’t.

What I learned was loud and clear: We would have had a better product and stayed out of the technological weeds much better if we had formally gone through the DDD material in 2009 instead of 2011. I underestimated how much more effective the team would have been if we took the time to build a common foundation of the DDD concepts. It didn’t mean we had to hold anything up. I just should have made it a priority earlier. I won’t make that mistake again.

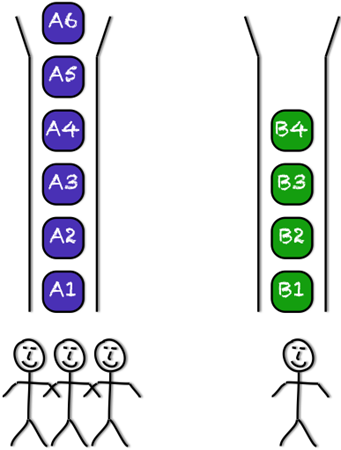

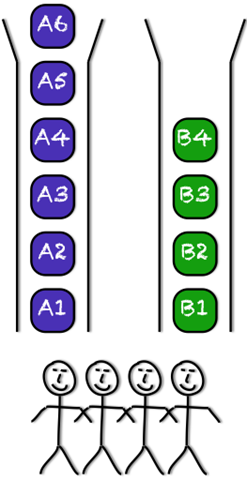

Misconceptions about Team Rooms and Open Floor Plans

I see this far too often. Well-meaning software organizations embracing agile software development tear down the walls in order to open up the space and allow easier collaboration. This sounds great, and it’s cheap. An easy win, right? Not if it’s done without some care and thought.

Premise 1: Irrelevant conversations are distractions.

Human beings are trained to pick other human beings’ voices out of the background noise and pay attention to them. There is little that is more distracting to concentration than hearing a conversation that has nothing to do with what you are working on.

Premise 2: A team is a group of people working toward a common goal.

If a team is really acting as a team, there is nothing that one subset of the team could be working on that is irrelevant to the rest of the team.

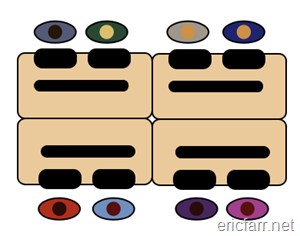

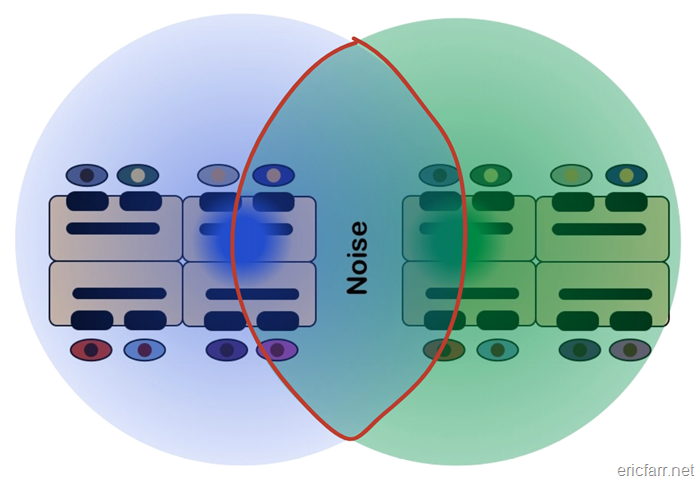

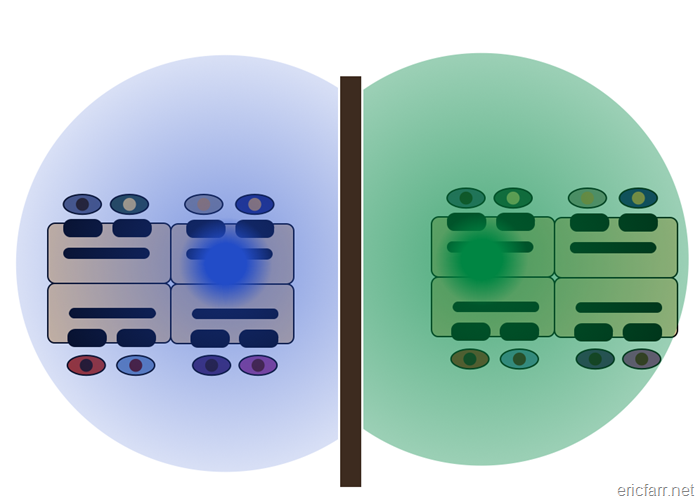

Imagine Team Green working in an open team room, paired at stations with one monitor and dual mice and keyboards…

Let’s say that pair number 1 is having a conversation…

That conversation is heard by all other members of the team. Because Team Green is a team, the fact that the whole team can hear it is a good thing. I cannot count how many times I have seen this…

Pair #1 overhears pair #2 getting stuck on some problem that sounds familiar. Pair #1 stops and gets involved in what pair #2 is doing. The four people discuss the problem and solve it, based largely on some previous experience that someone on pair #1 had. If one pair had not overheard the other pair struggling, they might have wasted a whole day (or at least until the next stand-up).

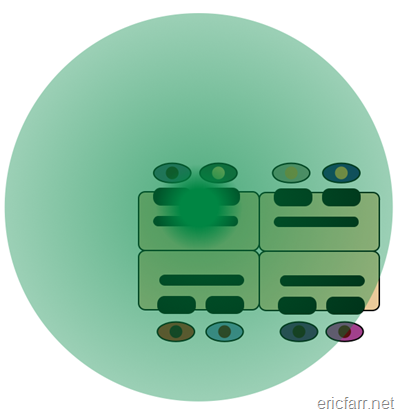

Now imagine Team Green is really busy and all working and talking…

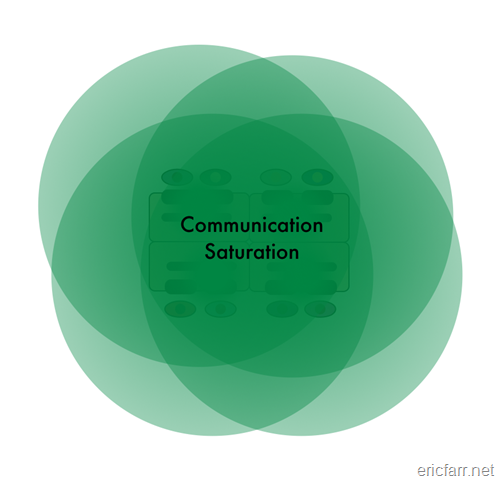

I first heard this situation referred to as communication saturation by Jeff Sutherland, but I think he got it from Jim Coplien. Here we have everyone able to hear every other conversation. If a stranger were to come upon this team area, it would sound like noise. But to a high-performing agile software team, it is completely natural because every conversation is related to furthering the goal for this iteration.

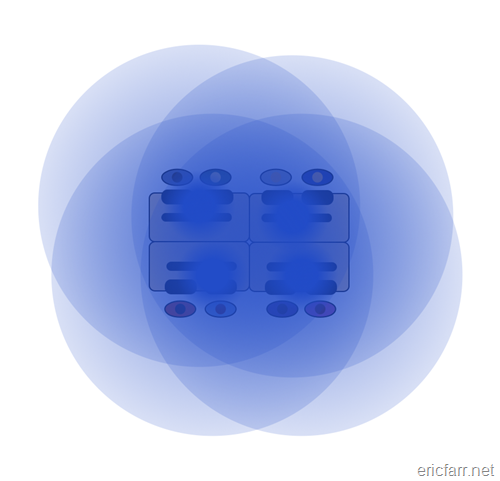

Now imagine we have a collaborative, high-performing Team Blue…

Team Blue is enjoying the same open space benefit that Team Green is. Now imagine we take our open-spaces-are-good momentum and co-locate Team Green and Team Blue…

Now we have green conversations in the blue team area and blue conversations in the green team area. This is not good. We now have noise distracting both teams.

If the blue conversations are as useful to the green team as green conversations, then you are not doing team-based development.

There are two possible remedies: create more space between the teams, put up acoustically meaningful walls, or both.

The bottom line: open spaces and shared conversations are good only within a team, not between teams.

DDD Anti-Pattern #1: Not Accounting for Commands and Queries as Separate Concerns

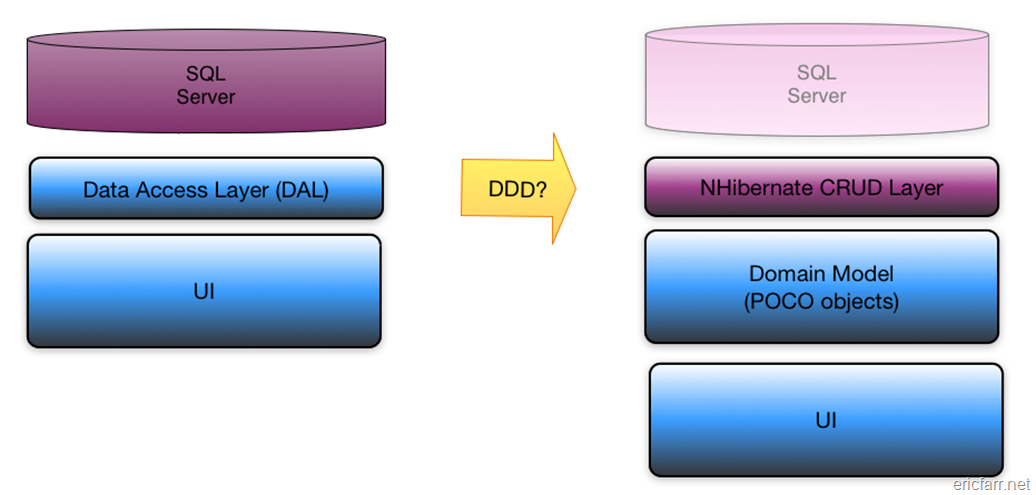

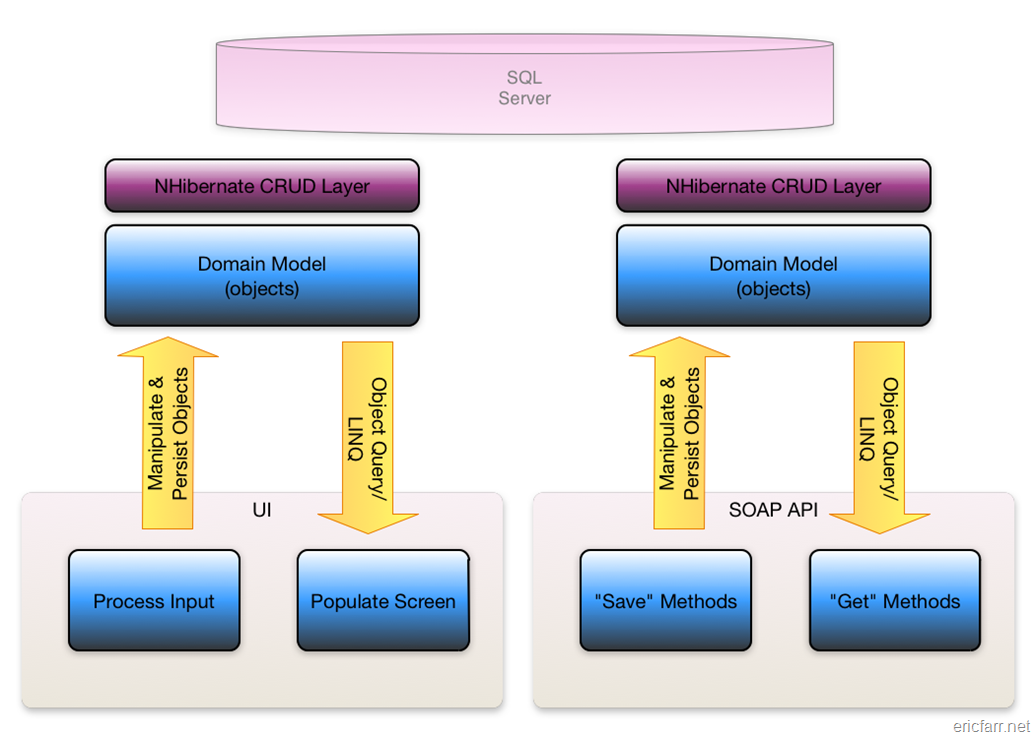

As a longtime object-oriented programmer, I was immediately drawn to the DDD concept of Persistence Ignorance. My initial idea of DDD was to simply express the domain concepts in a C# object model, then, on one side, map those objects to a persistent store with a technology like NHibernate and, on the other side, build a UI without any business logic in it.

Figure 1: The Naïve Transition to DDD

This was a big improvement over the traditional techniques on the Microsoft stack. Databases are inadequate for expressing domain models, and business logic in UIs or databases makes unit testing nearly impossible.

How the Naïve DDD Approach Fails

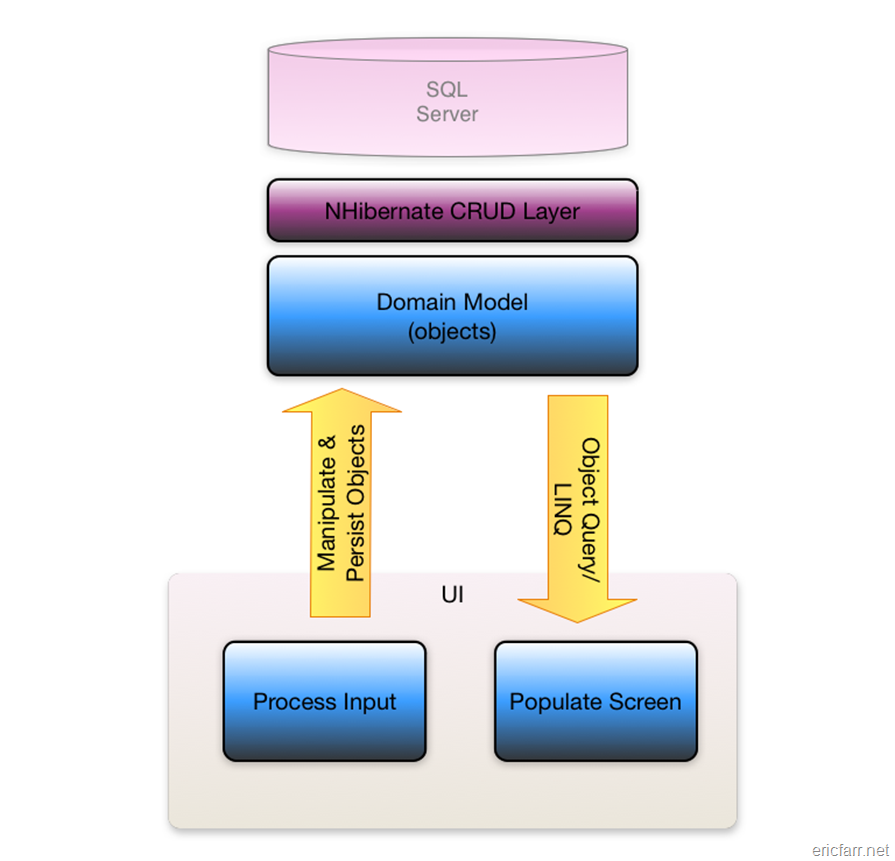

What I didn’t see was that this naïve solution traded one set of problems for another. The new solution looks like Figure 2 below.

Figure 2: The Naïve DDD Approach

This looks OK until you try to build anything remotely complex with this model. The first bit of friction we ran into involved populating some of the screens on the UI. Many screens don’t map smoothly to our rich object model. We get by with technologies like LINQ and lazy loading, but it still feels awkward to spin up a rich object model to simply copy properties to a view.

Things really turn south when we start to have multiple interfaces to the application. Imagine we have our UI and add a SOAP-based API to allow other programs to drive the application.

Figure 3: The Naïve DDD Approach with Multiple Applications

Now the CRUD layer really starts causing trouble. First, notice that because our domain model is defined in an assembly (or package or library), it gets deployed to each separate application that needs it. We have a layered architecture; however, all but the database layer get replicated to multiple applications.

CRUD-Based Models and Meaning

Imagine that a user makes an update to the comments on order 0123 through the UI at the same time that an external program calls GetWorkOrder(“0123”), updates the order description, then passes the updated order into SaveWorkOrder. Now both components are instantiating order 0123, copying the updated property values and saving it back to the database.

We lose the intent of each of those two actors. We are left to sort out any conflicts on simply timing and the intelligence of our ORM tool.
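Here is a deliberately naive sketch of that race. It assumes the simplest possible behavior, where a save writes the whole record back; a real ORM may do dirty tracking or optimistic locking, so treat this as the worst case rather than a description of any particular tool. The `get_work_order` and `save_work_order` functions stand in for the GetWorkOrder and SaveWorkOrder calls above.

```python
import copy

database = {"0123": {"description": "Fix pump", "comments": ""}}


def get_work_order(number: str) -> dict:
    # Each caller gets its own copy of the full record.
    return copy.deepcopy(database[number])


def save_work_order(number: str, order: dict) -> None:
    # Whole-record save: nothing says which field the caller meant to change.
    database[number] = order


ui_copy = get_work_order("0123")
api_copy = get_work_order("0123")

ui_copy["comments"] = "Customer prefers mornings"
api_copy["description"] = "Fix pump and replace seal"

save_work_order("0123", ui_copy)
save_work_order("0123", api_copy)  # silently discards the UI's comment

print(database["0123"])
```

The second save wins, and the comment is gone, even though the two actors never touched the same field.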

You could avoid the replication in Figure 3 by creating an application server, but you would still have the loss of semantically meaningful information that comes with a CRUD-based object model.

Request/Response: Good for Some Things Not for Others

Our Figure 3 architecture is inherently synchronous request/response. Consider the GetWorkOrder Web service method. Our external system calls that method and gets back the specified work order. This is what we want. The calling code cannot continue until the request comes back.

On the other hand, imagine any method that makes any kind of change to the system (like SaveWorkOrder). We would like to be able to queue these changes and allow the system to process them as compute capacity is available. We also don’t want to have to write code to handle the case where the Web service is temporarily not available.

The Old Way Had Some Things Right

Notice that the bad old traditional system does not exhibit some of these problems:

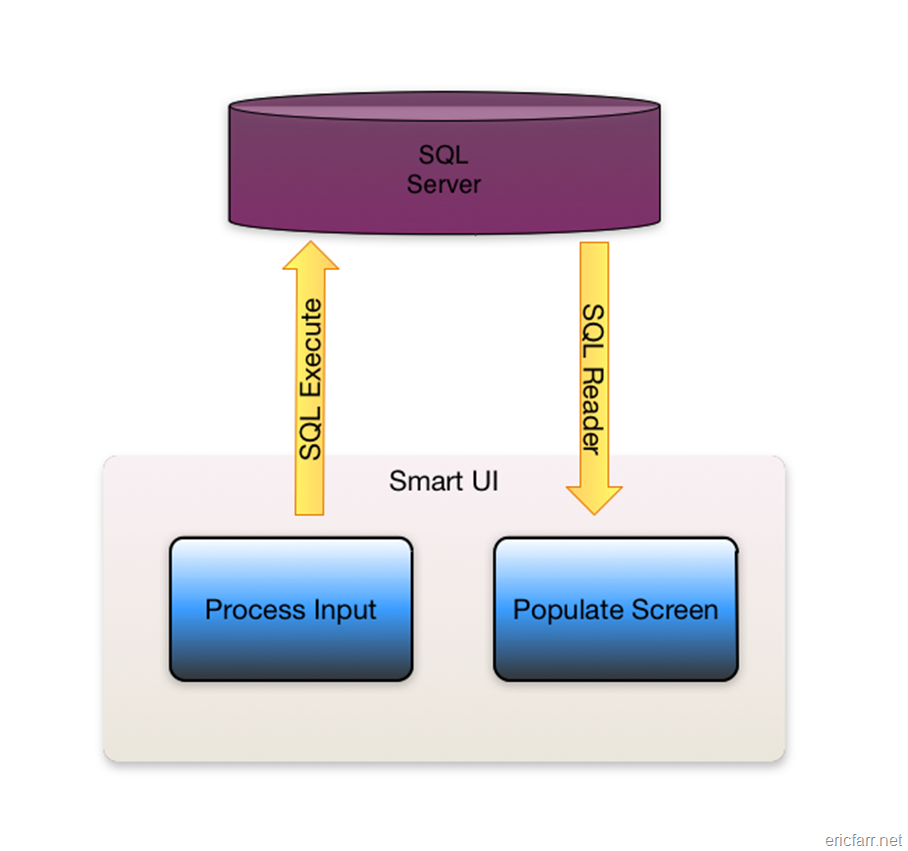

Figure 4: The Old Smart UI Approach

What the traditional system did right was account for updates being different from queries. Reads used SQL queries or stored procedures and views to get exactly what the screen needs—no more, no less. The write side used SQL inserts and updates to make just the changes necessary to accommodate the user’s actions in the UI. You couldn’t test it and you wouldn’t want to be the one to have to maintain it or enhance it, but it performed well and got the job done.

It did, however, have all the same request/response limitations.

Getting it Right with DDD

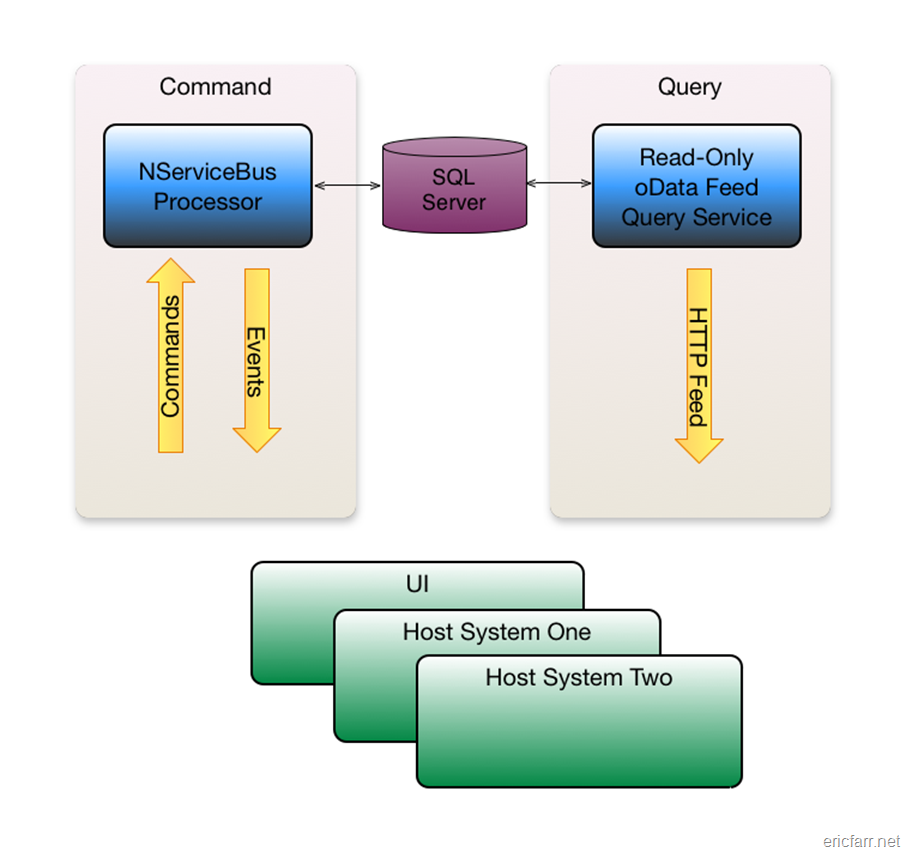

Fixing this problem meant an overhaul of the architecture, but the result was well worth the effort. In a nutshell, we redesigned the system to follow the Command Query Responsibility Segregation (CQRS) pattern.

Now all changes to the system are expressed as commands. Anytime the system changes, one or more events are published. Everything is commands in and events out. For reads, we added an Open Data Protocol feed that allows any application to query the data.

Figure 5: CQRS Design

Each of the applications and integration points is now sending commands to a central processing module (which may be dispatching to several machines). The commands express the precise intent of the sender of the command. So, UpdateWorkOrder becomes SetWorkOrderComment or SetWorkOrderDescription. Sending the command allows semantic meaning of the action to follow all the way through. We now log both the commands and the resulting events, creating a detailed audit trail of what happened within our system. This also allows us to better avoid merge conflicts because changes are more targeted with the specific commands.
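A stripped-down sketch of the commands-in, events-out idea follows. The two command names come from the paragraph above; the `WorkOrderChanged` event, the `handle` function, and the in-memory logs are hypothetical stand-ins for illustration, not our actual implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SetWorkOrderComment:
    number: str
    comment: str


@dataclass(frozen=True)
class SetWorkOrderDescription:
    number: str
    description: str


@dataclass(frozen=True)
class WorkOrderChanged:  # hypothetical event type
    number: str
    field_name: str
    new_value: str


work_orders = {"0123": {"description": "Fix pump", "comments": ""}}
command_log = []
event_log = []


def handle(command) -> WorkOrderChanged:
    """Apply one targeted change and publish the resulting event."""
    if isinstance(command, SetWorkOrderComment):
        field_name, value = "comments", command.comment
    elif isinstance(command, SetWorkOrderDescription):
        field_name, value = "description", command.description
    else:
        raise TypeError(f"Unknown command: {command!r}")

    command_log.append(command)
    work_orders[command.number][field_name] = value
    event = WorkOrderChanged(command.number, field_name, value)
    event_log.append(event)
    return event


handle(SetWorkOrderComment("0123", "Customer prefers mornings"))
handle(SetWorkOrderDescription("0123", "Fix pump and replace seal"))

print(work_orders["0123"])
```

Because each command touches only the field it names, the comment and the description updates both survive, and the two logs record who intended what.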

Asynchronous Messaging versus Request-Response

Another huge win for this design improvement is in how it can scale. Building out the “write” side of the system with NServiceBus means that we can ditch all of the home-grown queuing, retry, and pub/sub logic between components. Now, all of our writes are async, forcing us to learn to deal without getting return codes or statuses from our calls. It took some getting used to, but is liberating in the end.

Our load testing shows that the system is now resilient to peak loads. The message queues swell temporarily, but everything continues to function.
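The fire-and-forget style can be illustrated with an in-process queue. This is only a toy stand-in using Python's standard library, not NServiceBus: it has no durability, retries, or pub/sub, and the `send` function and command tuples are made up for the example.

```python
import queue
import threading

commands = queue.Queue()
processed = []


def worker() -> None:
    """Drain the queue at whatever pace the system can manage."""
    while True:
        command = commands.get()
        if command is None:  # sentinel: shut down
            commands.task_done()
            break
        processed.append(command)
        commands.task_done()


thread = threading.Thread(target=worker, daemon=True)
thread.start()


def send(command) -> None:
    # Returns immediately; the caller gets no return code or status.
    commands.put(command)


for n in range(3):
    send(("SetWorkOrderComment", "0123", f"note {n}"))

commands.join()      # wait here only so the example can show the result
commands.put(None)
thread.join()

print(len(processed))
```

Under a burst of writes the queue simply grows and then drains, which is the same shape of behavior we saw in load testing.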